When the iPhone was announced in 2007, Steve Jobs introduced it by saying that Apple was introducing three revolutionary products:

- Widescreen iPod with touch controls

- Revolutionary mobile phone

- Breakthrough internet communications device

At the time this seemed to cover the main functions that people would find useful on a mobile phone. Applications, or “apps”, were not yet something that most people cared about: most mobile phones of the preceding era had pretty clunky interfaces, and getting hold of apps was controlled pretty firmly by the mobile networks.

The iPhone didn’t initially support a way for developers to make native apps, instead offering only the “sweet solution” [1] of web apps in Safari. Before too long that changed: a native SDK was released in 2008, the App Store opened for business in July of that year and allowed developers to release native apps, and the initial app boom began.

So those “three revolutionary products” were joined by a fourth one: a “handheld game console”. In December 2008 Apple announced the top 10 paid apps in the App Store, and the top 7 of those 10 were games:

- Koi Pond [2]

- Texas Hold’em

- Moto Chaser

- Crash Bandicoot: Nitro Kart 3D

- Super Monkey Ball

- Cro-Mag Rally

- Enigmo

- Pocket Guitar

- Recorder

- iBeer

One notable thing about the first iPhone that may seem odd now is that the camera was only a stills camera: no video support, no flash, no exposure compensation. Not only that, but the camera used a tiny 2 megapixel sensor. Prior to the iPhone’s release, phones such as the 2006 Nokia N95 came with a 5MP camera, supported video and had a flash. So this aspect of the iPhone really only provided the bare-bones, minimal requirements for taking photos. You definitely couldn’t add “Digital SLR quality camera” to that initial trifecta of devices that Steve introduced the iPhone with.

Fast-forward to 2024 and the iPhone cameras have improved amazingly. The front-facing “selfie” camera (first added with the iPhone 4) on the iPhone 16 alone now takes 12MP photos and can shoot 4K video. Depending on which version of the phone you get, there are two or three main cameras, with the iPhone 16 Pro sporting 48MP sensors behind its regular and ultra-wide lenses, plus a telephoto lens. The iPhone is now one of the most popular cameras in the world, not just among “phone cameras” but among cameras in general. The iPhone, along with other smartphones, disrupted the camera industry [3], even with the much poorer quality cameras in the earlier phones.

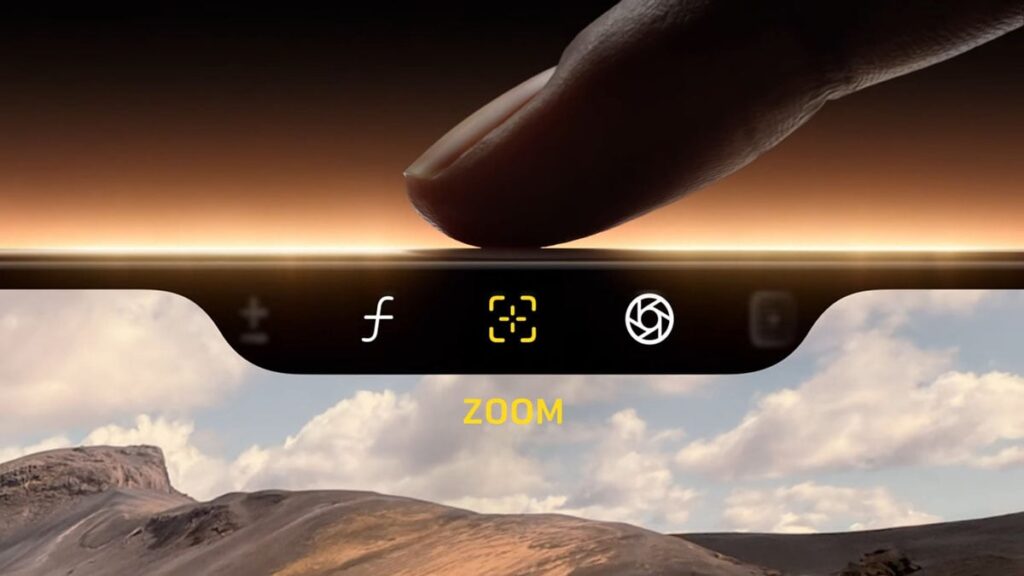

Whilst the camera(s) on the iPhone have been improving incrementally with each new model, this year sees an interesting new addition to both the regular and pro versions of the phone: the new “Camera Control” button. The Camera Control button is found on the side of the phone and is specifically for controlling the camera, providing a way to quickly access camera tools like exposure, depth of field and zoom [4]. It is both a physical button and a touch-sensitive control, so you can press and slide sideways to navigate, adjust and select settings.

Apple has been touting its new “Apple Intelligence” AI-based features along with the release of these new phones, but I think the Camera Control is the most interesting feature on these new iPhones [5]. The camera has been an important feature of the iPhone for some time now and has become the only camera many people use for taking photos and shooting video (it is even being used for making actual movies). But the addition of the Camera Control seems significant to me, in that it suggests Apple increasingly views being an actual camera as part of the iPhone’s core functionality, rather than seeing it as just a phone with a camera on it.

So it seems that, alongside the earlier addition of “handheld game console” to the initial three products that made up the first iPhone, we can now definitely add “High resolution mirrorless camera” to that list of “products” that makes up the iPhone.

Steve Jobs had a clear idea of the three core products that the iPhone was going to disrupt when he introduced the original iPhone, but I think the introductory slide would have been something more like this had he envisaged gaming and photography becoming such core functions of the device [6]:

1. MacStories wrote a good article looking back at the “sweet solution” back in 2018.

2. Ok, some might nit-pick that Koi Pond isn’t a game, but it’s definitely not a productivity app :)

3. Estimated to be an 87% drop in digital camera sales since 2010!

4. At the time of writing Apple has said additional functionality is coming in a future software update: “Camera Control will introduce a two-stage shutter that lets you automatically lock focus and exposure with a light press”.

5. Also, Apple Intelligence technically isn’t out at the time of writing; it will only become available incrementally with iOS 18.1 and subsequent point updates.

6. Is a “phone” still a core part of a smartphone device now? Arguably “internet communication” has replaced much of the traditional phone usage now.

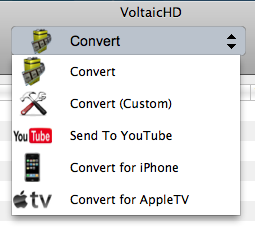

Along with the new preview / edit capability there is also the ability to convert and upload trimmed clips to YouTube directly from VoltaicHD 2.0. There are also preset output options for iPhone / iPod and AppleTV.
